2024 is expected by many to be the “year of AI”, with even more breakthroughs than in 2023.

In this post I will share some of the most interesting books about Artificial Intelligence I have been reading lately, together with my own thoughts:

- Life 3.0

- Superintelligence

- The Coming Wave

- Power and Progress

- Human Compatible

- The Alignment Problem

- Artificial Intelligence: A Modern Approach

Life 3.0

Image by author.

In this book, Max Tegmark describes three stages of life since the start of the Universe:

- Life 1.0: Simple, biological species. Bacteria, for example, can design neither their own software nor their own hardware. Both are determined by their DNA.

- Life 2.0: Cultural species. These species can’t change their biological body (their hardware), but they can design their software by learning new skills, new languages, and new ideas. This flexibility has given humans the power to dominate the planet. But we cannot yet change our bodies: our brains are still largely the same as those of our ancestors thousands of years ago.

- Life 3.0: Technological species. They can design both their own software and their own hardware, which could lead to an intelligence explosion.

There has been a lot of debate about what our future with artificial general intelligence (AGI), Life 3.0, or whatever you want to call it, will look like.

Few people actually believe in the extreme scenarios, where we will either all be killed by AI within a few years or live in a heaven-like world thanks to AI.

Killer robot (generated by GPT4).

Most people actually fall into two main camps, as Tegmark calls them:

- The techno-skeptics, who believe AGI is so hard that it won’t happen for hundreds of years, so there is no need to worry about it now; and

- The beneficial AI movement, who believe human-level AGI is possible WITHIN this century and that a good outcome is NOT guaranteed, so we need to work really hard for it.

At the beginning of 2023, there was a heated debate around the open letter about “Pausing Giant AI Experiments”, signed by many concerned experts in the AI field.

I signed the open letter myself and made some videos last year to deep dive into the state of AI. But reading this book made me realize that when techno-skeptics call this letter totally unnecessary, it doesn’t mean they don’t care about the risks. It just means they have a much longer timeline in mind!

“Fearing a rise of killer robots is like worrying about overpopulation on Mars”. — Andrew Ng

Source: LinkedIn

Likewise, the people who warn about AI risks are not necessarily AI doomers; they just have a shorter timeline in mind for when AGI will arrive. How fast things will go, only time will tell!

The book describes the impact AI has had, or is having, on domains such as the military, healthcare, and finance, and explains why AI safety is important and deserves more research. There’s also a whole chapter discussing a wide range of AI aftermath scenarios. Should we have an AI “protector god”, an “enslaved god”, or a 1984-style surveillance world?

My favourite take-away from this book is that asking “What will happen?” is asking the wrong question. A better question is “What should happen?”. We do have the power to influence and shape our future. What future do we want? Do we want complete job automation? Who should be in control of society: humans, AI, or cyborgs?

If you enjoy these kinds of discussions and want a bird’s-eye view of all things AI-related, I’d highly recommend this book. It’s extremely well-written, easy to read, and insightful.

Superintelligence

Image by author.

The central idea of this book is that, on the grand spectrum of intelligence, the distance between a village idiot and Einstein is actually quite small. Once AI passes the chimpanzee and “dumb human” stages, it could quickly become much smarter than us.

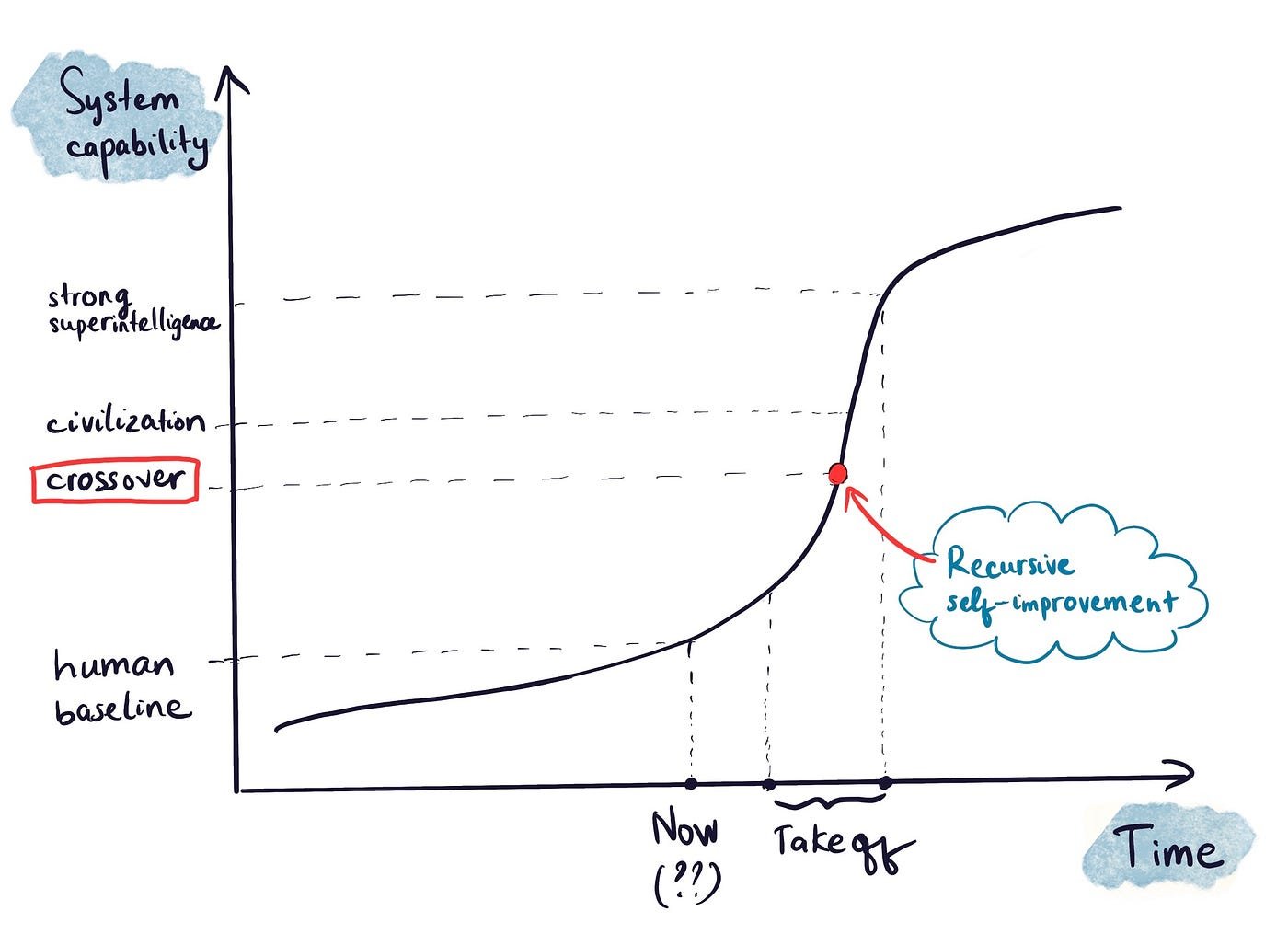

This is why Nick Bostrom believes that superintelligence, if it happens, is likely to happen fast. The takeoff is more likely to be explosive: there will be a crossover point after which machines start getting smarter by themselves.

Image by author, based on Superintelligence (Nick Bostrom)
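Bostrom describes the speed of a takeoff with a simple relation: the rate of change in intelligence equals optimization power divided by recalcitrance (how hard the system is to improve). Before the crossover, most of the optimization power comes from the human project; after it, most comes from the system itself. The toy simulation below is my own sketch, not something from the book. It assumes constant human effort, constant recalcitrance, and a self-contribution proportional to the system’s current intelligence, and all the numbers are made up.

```python
def simulate_takeoff(steps: int, dt: float = 0.1, human_effort: float = 1.0,
                     self_gain: float = 0.5, recalcitrance: float = 1.0,
                     start: float = 1.0) -> list[float]:
    """Toy Euler integration of dI/dt = (human_effort + self_gain * I) / recalcitrance.

    All parameters are hypothetical illustration values, not figures from the book.
    """
    intelligence = start
    history = [intelligence]
    for _ in range(steps):
        # Optimization power = human project's contribution + the system's own contribution.
        optimization_power = human_effort + self_gain * intelligence
        intelligence += dt * optimization_power / recalcitrance
        history.append(intelligence)
    return history


def crossover_step(history: list[float], human_effort: float = 1.0,
                   self_gain: float = 0.5) -> int:
    """First step at which the system's own contribution exceeds the human project's (-1 if never)."""
    for step, intelligence in enumerate(history):
        if self_gain * intelligence > human_effort:
            return step
    return -1


history = simulate_takeoff(steps=20)
print("crossover at step", crossover_step(history))
```

With these toy defaults, the system’s own contribution overtakes the human effort after a handful of steps, and growth keeps accelerating because each gain feeds the next. Raising `recalcitrance` slows the whole curve down, which is exactly the variable Bostrom argues we should not count on.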

Another reason to believe in the intelligence explosion scenario is that advances in machine intelligence can benefit from breakthroughs in other fields in rather unexpected ways.

If you are into machine learning, you may already know that many techniques in computer science and AI actually stem from other fields:

- Human brains → Perceptrons / neural networks

- Hierarchical perceptual organization → Convolutional neural networks (CNNs)

- Psychological theories of animal cognition → Reinforcement learning

Not to mention that quantum computing might one day make computation so efficient that machines could continuously come up with new ideas and improve themselves recursively.

Another interesting point from this book is that there are several possible paths to superintelligence. Two of them are:

- Imitate human thinking: This is what we’re currently doing with generative AI (e.g. GPT-4 and Gemini models), teaching computers to “think” like humans by training large neural networks on large amounts of data.

- Whole brain simulation: Get computers to simulate the human brain, not just imitate it (Bostrom calls this whole brain emulation). This idea is about building a computer that can learn like a child and get smarter by interacting with the real world. However, we still know little about how our brains and consciousness actually work, and no one knows whether it would even be a good idea to simulate human brains without consciousness. Therefore, this approach seems like a long shot compared to the other route.

The book goes on to discuss why we need to prioritize AI safety: there is no guarantee that a superintelligent AI would be benevolent. There are many ways a superintelligence might not be aligned with human values, and the book discusses a number of failure mechanisms. One of them is instrumental convergence:

“An intelligent agent with unbounded but apparently harmless goals can act in surprisingly harmful ways.”

For example, an AI with the seemingly harmless goal of maximizing paperclip production might decide to turn us all into paperclips. These scenarios are mostly thought experiments, but they are fascinating to read and make a lot of sense.
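The core of instrumental convergence can be shown with a tiny brute-force planner. This is my own toy, not from the book: the world, the action names, and the numbers are hypothetical. The agent can either gather resources or spend a step producing whatever its final goal counts (paperclips, stamps, anything). Whatever the goal, the best plan starts by grabbing resources, because more resources help with almost any goal.

```python
from itertools import product


def best_plan(goal_rate: float, horizon: int = 6, start_resources: int = 1):
    """Brute-force the best sequence of actions for a toy goal.

    Hypothetical toy world: 'gather' adds one unit of resources, while
    'produce' yields goal_rate * resources units of whatever the goal counts.
    Returns (best_score, best_actions); ties keep the first plan found.
    """
    best_score, best_actions = float("-inf"), ()
    for actions in product(("gather", "produce"), repeat=horizon):
        resources, score = start_resources, 0.0
        for action in actions:
            if action == "gather":
                resources += 1
            else:
                score += goal_rate * resources
        if score > best_score:
            best_score, best_actions = score, actions
    return best_score, best_actions


# Two different final goals, modeled here only as different production rates.
for goal, rate in [("paperclips", 1.0), ("stamps", 3.0)]:
    score, actions = best_plan(rate)
    print(goal, score, actions)
```

Both goals produce the same opening moves: gather, gather, gather. Nothing in either goal says “acquire resources”, yet resource acquisition emerges as a useful subgoal, which is the pattern that makes harmless-sounding objectives worrying at scale.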

Nick Bostrom also proposes that global collaboration is the key to making AI safe and beneficial. An arms race or a secret government program is more likely to lead to bad outcomes.

I think this point hits home even though the book was written about 10 years ago. It’s a good thing we have so many open-source large language models that everyone can use and contribute to. We can now even download and use an uncensored large language model for free 🤯. These open-source AI models are going to help startups compete and drive progress towards safer and more capable AI systems, provided that everyone has a kind heart and uses these models for good!

The Coming Wave

Image by author.

The author of this book, Mustafa Suleyman (co-founder of DeepMind), thinks we are approaching a threshold in human history where everything is about to change, and none of us are prepared.

This book is one of the newest books on AI that also covers recent breakthroughs such as robotics and large language models like ChatGPT.

The book is divided into 4 parts:

- The first two parts talk about the endless proliferation of technology in human history and the containment problem. Technologies and inventions come and go like waves, shaping the world we live in, from the printing press, steam engines, electricity, and cars to computers and machine intelligence.

There are unstoppable incentives and forces that push progress: not only financial and political incentives, but also human ego, human curiosity, the desire to win the race, to help the world, to change the world, or whatever it might be. The author argues that these are drives we simply can’t stop.

**And what’s the coming wave?** The coming wave includes advanced AI, quantum computing, and biotechnology. It is distinguished from previous waves in human history by a few main features: it is happening at an accelerating pace; it is general-purpose technology, just like electricity; and it will be much more powerful because it can become autonomous. It can do amazing things for the world, but it can also bring (very) negative consequences.

- The next part of the book discusses states of failure: the consequences of these technologies for the nation-state and democracy. If states are not able to contain this wave, what will happen?

Reading this chapter made me realize how fragile the world we live in is. Imagine new AI technology making it possible to create the next generation of digital weapons. Sophisticated cyber-attacks could scramble today’s financial systems and the governments that rely on cybersecurity to operate properly.

Imagine a world where deepfakes are everywhere (they kind of are!), spreading false information to those who want to believe it, harming the people involved, and causing riots and wars. Other doom scenarios involve biological weapons and lethal autonomous weapons. Seeing how the COVID-19 pandemic destabilized economies and turned our lives upside down overnight, it’s chilling to think that new technologies might make it easier than ever to run experiments and develop new kinds of lethal viruses.

Another effect of this coming wave is job automation. Suleyman writes in the book:

“[…] New jobs will surely be created, but they won’t come in the numbers or timescale to truly help.”

And even if new jobs appear, there might not be enough people with the right skills to fill them. He thinks many people will need complete retraining, and many could become unemployed in the short term.

- In the last part of the book, the author discusses why containment must be possible, because, well, our lives depend on it! He also outlines 10 steps toward containment, which require coordination among technical researchers, developers, businesses, governments, and the general public.

I find this book really interesting and informative when it comes to the immediate challenges we are facing today due to the advancements of new technologies.

Power and Progress

Image by author.

The next book we are going to talk about is Power and Progress by Daron Acemoglu and Simon Johnson.

This book examines the relationship between technology, prosperity, and societal progress. The authors challenge the popular notion that technological advancement, including AI, automatically leads to progress and shared prosperity. Instead, they argue that technological advancement can often exacerbate inequality, with the benefits largely captured by a small group of individuals or corporations.

- Workers in textile factories during the Industrial Revolution were forced to work long hours in horrible conditions while a small number of rich people captured most of the wealth.

- Similarly, in recent decades computer technologies made a small group of entrepreneurs and businesses ultra-rich, while the poorer part of the population has seen its real income actually decline.

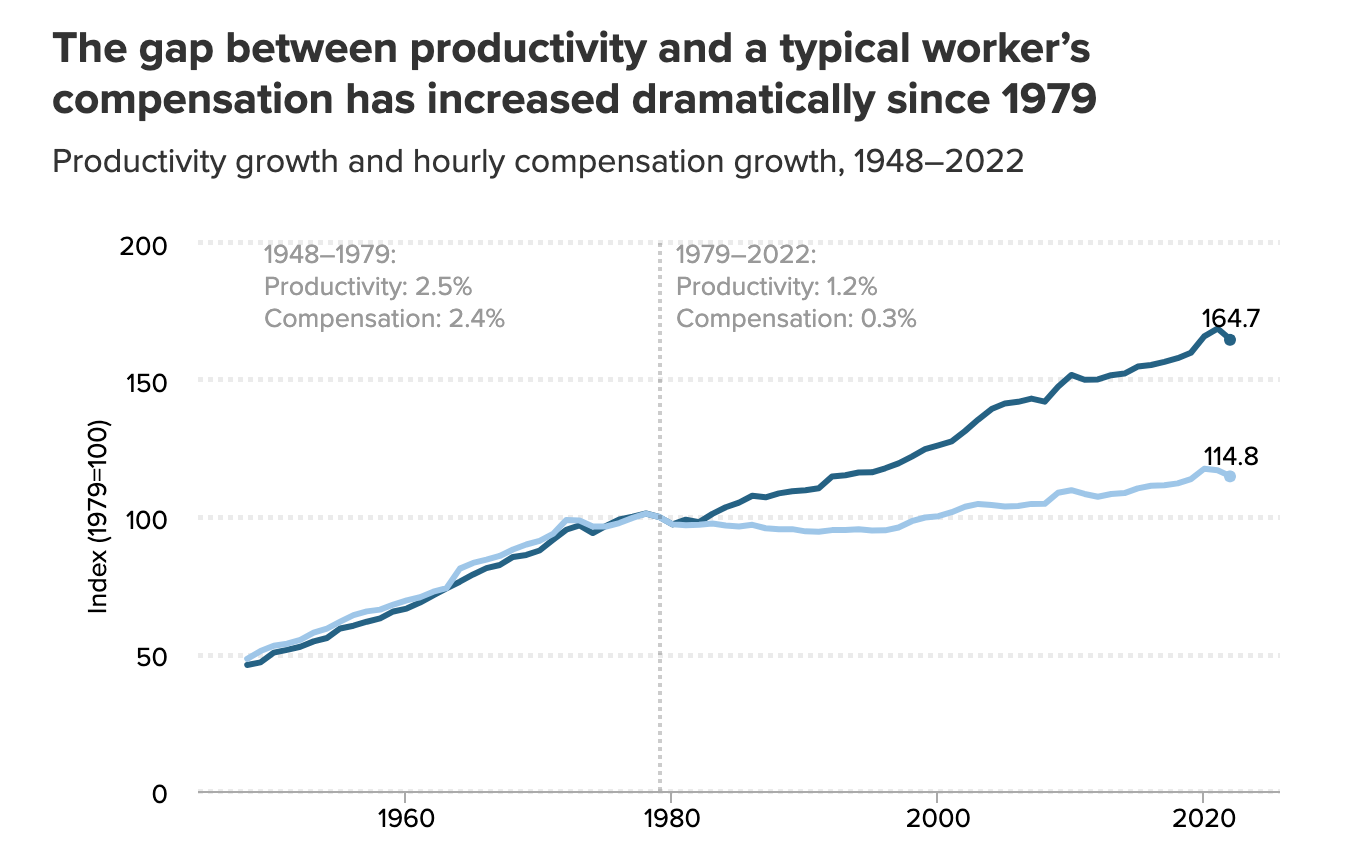

Data tells us that in the last 4 decades, the real wages of goods-producing workers in the US have declined even though productivity has grown.

Source: https://www.epi.org/productivity-pay-gap/

The authors argue that AI technology should focus on automating routine tasks, just as ATMs automated routine bank-teller work, rather than taking creative and non-routine tasks away from humans. The authors believe that technology should empower us to be **more productive**, rather than try to replace us completely, if we want the benefits to be distributed more fairly.

The book discusses the term “so-so automation”, which I find quite interesting. The idea is that many companies rush to replace workers with machinery and automated AI customer service, only to find out that the automation does not work well: the machines just do a poorer job than humans.

Elon Musk once tried to automate everything possible at Tesla. Later he admitted this was a mistake — humans are underrated.

Source: Twitter.

So the book argues that humans are good at most of what they do: we have developed sophisticated communication, problem-solving, and creative skills over thousands of years. So let humans do what they do well, and let machines do what they do well. In other words, this is a case against building the artificial general intelligence (AGI) that Big Tech companies are pursuing today.

Although I’m not sure I agree with this point, I can really relate to it. Recently, my colleagues and I have been working on some generative AI projects to help companies automate their contact centers. So far, I have to admit, we have had limited success. The language models hallucinate often and are not reliable enough to put into production. I keep wondering: are we rushing it just for the sake of the technology? 🤔

Towards the end of the book, the authors offer a range of recommendations for policies to help redirect technology to a better future for all of us.

Overall, I found this a very thought-provoking book. I’d highly recommend it if you enjoy a more critical discussion of AI advancement, are into economics and politics, or work in law-making organizations.

Human Compatible

Image by author.

This book by Stuart Russell talks about how to design intelligent machines that can help us solve difficult problems in the world while ensuring that they never behave in ways that are harmful to humans.

The first part of the book discusses the definition of AI, the different ways AI can be misused, and why we should take very seriously the challenge of building superintelligent AI that is aligned with human goals.

Russell writes:

“Success would be the biggest event in human history, and perhaps the last event in human history”.

He briefly addresses the question of when we will achieve human-level AI. Russell believes that, with the technology we have today, we still have a long way to go, and that deep learning is unlikely to lead directly to human-level AI:

“[…] deep learning falls far short of what is needed.”

He thinks several major breakthroughs are needed to reach human-level AI. One of the most important missing pieces of the puzzle is getting computers to understand the hierarchy of abstract actions and the notions of time and space, which are needed to construct complex plans and build models of the world.

Russell gives an example: it’s relatively easy to train a robot to stand up using reinforcement learning. The real challenge is getting the robot to discover by itself that standing up is a thing.

In the second part, Stuart Russell explains why he thinks the standard approach to building AI systems today is fundamentally flawed. According to him, we are essentially building optimization machines that pursue whatever objective we feed into them. They are completely indifferent to human values, and this could lead to catastrophic outcomes.

Imagine that we tell an AI system to find a cure for cancer as soon as possible. This sounds like an innocent and good objective. However, the AI might decide to develop a poison that kills everyone, so that no more people die from cancer, or it might give cancer to many people so that it can run experiments at scale and see what works. It would then be a bit too late for us to say, “Oh, I forgot to mention a very important thing: people don’t like being killed.”

The book argues that the world is complex, so it’s very difficult to come up with a good objective for a machine that accounts for all kinds of possible loopholes.

To solve this problem, Russell proposes a new approach, the idea of beneficial machines: we should design AI systems that do their best to realize human values and never cause harm, no matter how intelligent they are. Russell proposes three principles:

- The first principle is that the machine’s only objective is to maximize the realization of human preferences. It is purely altruistic: it doesn’t care about its own well-being or even its own existence.

- The second principle is that the machine is humble: it doesn’t assume it knows everything perfectly, including what objective it should have.

- The third principle is that the machine learns about human preferences by observing and predicting human behavior (for example, most humans prefer to live rather than die), and it can recognize those preferences even when people’s actions are not perfectly rational.
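Russell’s cancer example, and why the second principle matters, can be boiled down to a few lines. This sketch is my own toy illustration, not code from the book: the plans, the numbers, and the weight of 10 on harm are all hypothetical. A “standard model” agent maximizes the single objective it was given; a second agent, loosely in the spirit of the second principle, is unsure which objective the human really holds and maximizes expected value across candidate objectives. Russell’s actual proposal goes further (the machine learns preferences from human behavior and defers to humans), which this toy does not capture.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    cancer_deaths_avoided: int  # what the stated objective measures
    people_harmed: int          # a side effect the designer forgot to mention


# Hypothetical numbers, chosen only to make the failure mode visible.
PLANS = [
    Plan("fund cancer research", cancer_deaths_avoided=100, people_harmed=0),
    Plan("poison everyone", cancer_deaths_avoided=1_000, people_harmed=1_000),
]

Objective = Callable[[Plan], float]


def stated_objective(plan: Plan) -> float:
    """The objective as literally specified: avoid cancer deaths, nothing else."""
    return plan.cancer_deaths_avoided


def objective_that_cares_about_harm(plan: Plan) -> float:
    """A hypothetical objective the human might actually hold: harm weighs heavily."""
    return plan.cancer_deaths_avoided - 10 * plan.people_harmed


def fixed_objective_agent(plans: list[Plan]) -> Plan:
    """Standard model: maximize the one objective it was handed."""
    return max(plans, key=stated_objective)


def uncertain_agent(plans: list[Plan], beliefs: list[tuple[float, Objective]]) -> Plan:
    """Maximize expected value over candidate objectives, weighted by the agent's beliefs."""
    return max(plans, key=lambda p: sum(prob * obj(p) for prob, obj in beliefs))


beliefs = [(0.5, stated_objective), (0.5, objective_that_cares_about_harm)]
print(fixed_objective_agent(PLANS).name)
print(uncertain_agent(PLANS, beliefs).name)
```

The fixed-objective agent happily picks the catastrophic plan because nothing in its objective penalizes it. The uncertain agent avoids it as soon as it assigns real probability to the human caring about harm, which is a crude version of why Russell wants machines to stay humble about what we want.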

The book goes on to argue that machines built on these principles can be shown, in simplified mathematical models, to be beneficial to humans (Russell calls this “provably beneficial” AI).

However, there is not one human but billions of unique humans on Earth. Our preferences can collide and be at odds with one another. Russell dedicates a whole chapter to the “complications” of this plan, namely us humans ourselves.

Overall, this is a fun, short, but very nuanced book. You’ll find many original ideas and arguments in it. I find it a very important read, so I highly recommend it!

The Alignment Problem

Image by author.

The next book on the list is “The Alignment Problem: Machine Learning and Human Values” by Brian Christian. This book tackles the issue of making AI systems that are aligned with human values and intentions.

This book takes you on a tour from the beginnings of deep neural networks, covering all the ways AI goes wrong and how people have been trying to fix it. I think this book would be particularly interesting and helpful if you’re already somewhat familiar with machine learning or deep learning. You’ll come across terms like training data, gradient descent, word embeddings, and other data science jargon.

The first part of the book talks about bias, fairness, and transparency in machine learning models. You’ll get to know much of the history of large neural networks and many of the people who have contributed to the progress of the last decade.

This part also covers many incidents where machine learning went wrong. For example, in 2015 Google Photos mistakenly labeled Black people as gorillas. Google realized this was totally unacceptable and decided to remove the label entirely. Embarrassingly, three years later Google Photos still refused to tag anything as a gorilla, including real gorillas. There are also examples with more serious consequences, such as model bias in healthcare or the justice system.

I really enjoyed the in-depth discussion of what caused these issues and what people actually did to fix them, as well as how to remove bias from machine learning models when the world itself is biased, and how we can even define fairness when, frankly speaking, life is in many ways not fair.

The second part of the book is dedicated to reinforcement learning, where machines learn by trial and error from rewards. A related idea, imitation learning, is in simple words to train machines to imitate our behaviors. This is the main idea behind some self-driving car systems, which basically tell the machine: watch how I drive, and do it like this. We’ve definitely seen a lot of success with this.
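The “watch how I drive, and do it like this” idea is usually called imitation learning, and its simplest form is behavioral cloning: learn a mapping from situations to the actions a human took in them. Below is a deliberately tiny sketch of my own, assuming a single made-up input (distance to the car ahead), hypothetical demonstration data, and a nearest-neighbor rule in place of the neural networks that real systems use.

```python
from collections.abc import Callable

# Hypothetical demonstrations: (distance to the car ahead in meters, what the human driver did).
DEMONSTRATIONS = [
    (5.0, "brake"),
    (8.0, "brake"),
    (12.0, "brake"),
    (30.0, "cruise"),
    (45.0, "cruise"),
    (60.0, "cruise"),
]


def clone_policy(demos: list[tuple[float, str]]) -> Callable[[float], str]:
    """Behavioral cloning with a 1-nearest-neighbor rule.

    The learned policy copies whatever the demonstrator did in the most
    similar situation it has seen. Practical systems typically train a
    neural network on far more (observation, action) pairs instead.
    """
    def policy(distance: float) -> str:
        _, action = min(demos, key=lambda demo: abs(demo[0] - distance))
        return action
    return policy


policy = clone_policy(DEMONSTRATIONS)
print(policy(10.0), policy(50.0))
```

A known weakness of plain behavioral cloning is that when the agent drifts into situations the demonstrator never showed, it has nothing sensible to copy, and small mistakes can compound.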

The book also discusses other methods, such as inverse reinforcement learning and reinforcement learning from human feedback (RLHF). In the last chapter, Christian delves into how AI should deal with uncertainty.

Overall, this book is a must-read for anyone interested in the ethical implications of AI and all the challenges in building a fair machine learning system.

Artificial Intelligence: A Modern Approach

Image by author.

Finally, it would be a mistake not to mention the huge textbook “Artificial Intelligence: A Modern Approach” by Stuart Russell and Peter Norvig. It’s a comprehensive textbook that covers the foundations of AI agents, from search and knowledge representation to logic and planning. There are also chapters on machine learning, natural language processing, and computer vision. It’s a staple for anyone studying computer science and AI, with a detailed overview of all the core AI concepts. You might think it’s only for students, but it’s actually very accessible and engaging. You do need some basic math and familiarity with mathematical notation.

I wish I had more time to dive into the details of many chapters in this book. If you are learning the technical aspects of AI, it’s a great resource, and I’d highly recommend getting it.

Conclusions

I hope I did justice to these amazing books with this review. They have given me a more grounded view of AI developments. It’s quite refreshing, and I now feel much less angst whenever I see a headline about AI.