Understanding and Coding the Self-Attention Mechanism of Large Language Models From Scratch

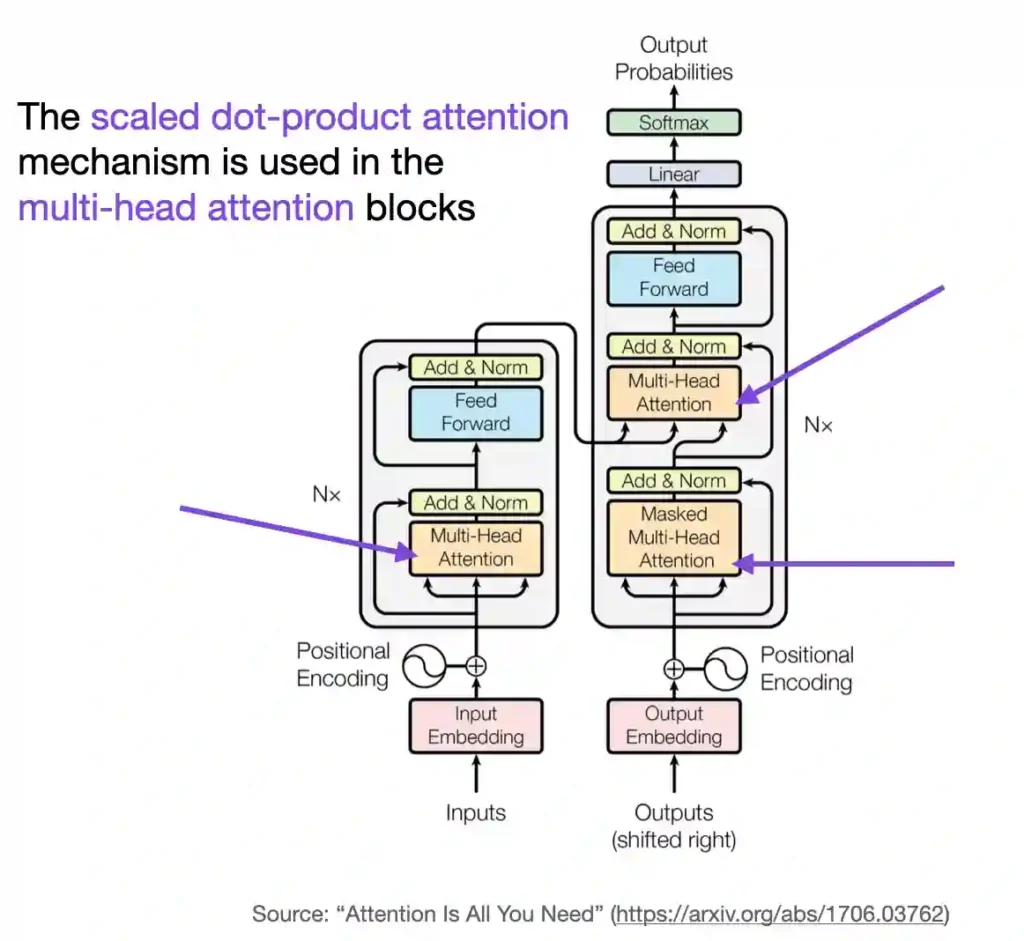

In this article, we are going to understand how self-attention works by coding it from scratch, one step at a time. Since its introduction in the original transformer paper (Attention Is All You Need), self-attention has become a cornerstone of many state-of-the-art deep learning models, particularly in the field of Natural …
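Here is a rough preview of where a step-by-step walkthrough like this ends up. The following is a minimal sketch of scaled dot-product self-attention, softmax(QKᵀ/√d_k)V, as defined in Attention Is All You Need. It assumes plain Python lists (no PyTorch or NumPy) so it runs anywhere. The weight matrices are random placeholders rather than trained parameters. Helper names such as `self_attention` and `random_matrix` are hypothetical and are not taken from the article.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); matrices are lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    """Swap rows and columns of a list-of-rows matrix."""
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Softmax over one row; subtracting the max keeps exp() numerically stable."""
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Hypothetical helper: scaled dot-product self-attention for one sequence.

    x has shape (seq_len x d_in); the projection matrices are (d_in x d_k)
    for queries/keys and (d_in x d_v) for values.
    """
    q = matmul(x, w_q)  # queries: seq_len x d_k
    k = matmul(x, w_k)  # keys:    seq_len x d_k
    v = matmul(x, w_v)  # values:  seq_len x d_v
    d_k = len(w_k[0])
    scores = matmul(q, transpose(k))  # unnormalized attention scores: seq_len x seq_len
    # Scale by sqrt(d_k), then normalize each row into attention weights.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted sum of value vectors (the "context vector").
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Hypothetical helper: random placeholder weights, not trained parameters."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(123)
d_in, d_k, d_v, seq_len = 4, 3, 2, 5
x = random_matrix(seq_len, d_in, rng)  # toy stand-in for embedded input tokens
w_q = random_matrix(d_in, d_k, rng)
w_k = random_matrix(d_in, d_k, rng)
w_v = random_matrix(d_in, d_v, rng)

context, attn = self_attention(x, w_q, w_k, w_v)
print(len(context), len(context[0]))  # 5 2
```

Each row of `attn` is a probability distribution over the input tokens, and each row of `context` mixes the value vectors according to those weights. Dividing by √d_k keeps the dot products from growing with the key dimension, which would otherwise push the softmax toward very peaked outputs.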