Large Language Models (LLMs) have changed how we interact with and process information. Trained on massive text datasets, they excel at general knowledge tasks. In specialized domains, however, their performance can be limited. This is where Retrieval-Augmented Generation (RAG) comes in: it lets an LLM access relevant documents at inference time and use them to answer questions within a specific domain.

This blog post dives into RAFT (Retrieval-Augmented Fine-Tuning), a novel training recipe that enhances the ability of LLMs to perform well in domain-specific RAG tasks. We’ll explore the challenges of adapting LLMs to specialized domains, delve into the details of RAFT, and analyze its effectiveness through evaluation results and qualitative examples.

The Challenge: Adapting LLMs to Specialized Domains

While LLMs shine in general knowledge tasks, their performance can falter when applied to specialized domains like medicine, law, or coding. These domains require specific knowledge that may not be adequately captured in the LLM’s pre-training data.

Two main approaches exist to address this challenge:

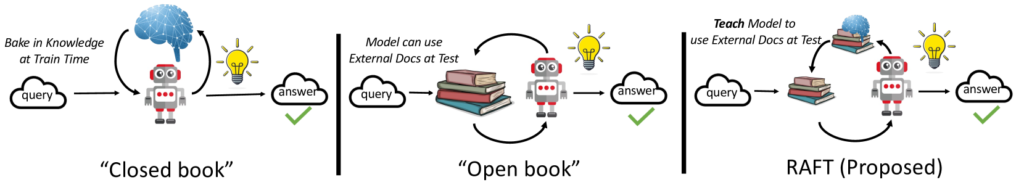

- In-context learning through RAG: This method allows the LLM to reference relevant documents during inference, providing access to new knowledge. However, it doesn’t fully leverage the learning opportunity presented by a fixed domain and early access to test documents.

- Supervised fine-tuning: This approach involves further training the LLM on domain-specific data, enabling it to learn general patterns and align with the desired task. However, existing methods either neglect to utilize documents at test time or fail to account for imperfections in the retrieval process.

The limitations of these approaches can be compared to taking an open-book exam without studying. While the LLM has access to the necessary information, it lacks the preparation and practice to effectively utilize it.

RAFT: Studying for the Open-Book Exam

RAFT bridges the gap between supervised fine-tuning and RAG, effectively preparing the LLM for the “open-book exam” of domain-specific question answering.

Here’s how RAFT works:

- Training data preparation: Each data point consists of a question (Q), a set of documents (D), and a corresponding Chain-of-Thought (CoT) style answer (A*).

- Oracle document (D*): This document contains the information required to answer the question.

- Distractor documents (D_k): These documents are irrelevant to the question.

- Training with a mix of oracle and distractor documents: The LLM is trained on a mix of data points, some containing the oracle document and distractors, and others containing only distractors. This forces the model to learn to differentiate between relevant and irrelevant information.

- Chain-of-Thought reasoning: The answer (A*) includes a CoT explanation, citing specific passages from the relevant document(s) and outlining the reasoning process. This enhances the model’s ability to reason and explain its answers.

By training with this approach, RAFT equips the LLM with the skills to identify and utilize relevant information from the provided documents, effectively preparing it for the open-book exam scenario.
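The data preparation steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the names `RaftExample`, `build_raft_example`, and `format_prompt`, the `oracle_fraction` and `num_distractors` parameters and their default values, and the prompt layout are all assumptions made for the example. It also assumes the distractor-only examples keep the same number of distractors as the examples that include the oracle document.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class RaftExample:
    question: str         # Q
    documents: List[str]  # context shown to the model, in shuffled order
    answer: str           # chain-of-thought style answer, A*
    has_oracle: bool      # True if the oracle document D* is in the context


def build_raft_example(question, oracle_doc, cot_answer, distractor_pool,
                       num_distractors=3, oracle_fraction=0.8, rng=random):
    """Assemble one training example from a question and its documents.

    oracle_fraction is a hypothetical hyperparameter: the share of examples
    whose context includes the oracle document. The remaining examples
    contain distractors only, while the target answer stays the same.
    """
    candidates = [d for d in distractor_pool if d != oracle_doc]
    docs = rng.sample(candidates, num_distractors)
    keep_oracle = rng.random() < oracle_fraction
    if keep_oracle:
        docs.append(oracle_doc)
    # Shuffle so the oracle document has no fixed position in the context.
    rng.shuffle(docs)
    return RaftExample(question, docs, cot_answer, keep_oracle)


def format_prompt(example):
    """Render the context and question as one prompt string (assumed layout)."""
    context = "\n\n".join(
        f"[Document {i + 1}]\n{doc}" for i, doc in enumerate(example.documents)
    )
    return f"{context}\n\nQuestion: {example.question}\nAnswer:"
```

Each `RaftExample` would then be turned into a supervised fine-tuning pair, with `format_prompt(example)` as the input and `example.answer` as the target. Passing a seeded `random.Random` instance as `rng` makes the dataset reproducible.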

Evaluation: RAFT Outperforms the Competition

RAFT was evaluated on various datasets spanning diverse domains, including:

- Open-domain QA: Natural Questions (NQ), Trivia QA (TQA), and HotpotQA

- Coding/API documentation: HuggingFace, Torch Hub, and TensorFlow Hub from APIBench

- Medical QA: PubMed QA

Across all datasets, RAFT consistently outperformed baseline models, including:

- LLaMA2-7B-chat with 0-shot prompting: This baseline represents the standard instruction-finetuned model without access to documents.

- LLaMA2-7B-chat with RAG: This baseline utilizes RAG but lacks domain-specific fine-tuning.

- Domain-specific fine-tuning (DSF) with and without RAG: These baselines represent models fine-tuned on the specific domain data, with and without RAG augmentation.
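The baselines differ along two axes: which model weights are used, and whether retrieved documents are placed in the prompt. The sketch below makes that explicit. It is only an illustration: the `BASELINES` table, the `build_prompt` and `prompt_for` helpers, and the prompt wording are hypothetical and are not taken from the evaluation setup itself.

```python
from typing import Dict, List, Optional, Tuple


def build_prompt(question: str, documents: Optional[List[str]] = None) -> str:
    """Return a plain question prompt, or one prefixed with retrieved context."""
    if not documents:
        return f"Question: {question}\nAnswer:"
    context = "\n\n".join(documents)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Baseline name -> (model weights, whether retrieved documents are in the prompt)
BASELINES: Dict[str, Tuple[str, bool]] = {
    "LLaMA2-7B-chat, 0-shot": ("instruction-tuned", False),
    "LLaMA2-7B-chat + RAG": ("instruction-tuned", True),
    "DSF": ("domain fine-tuned", False),
    "DSF + RAG": ("domain fine-tuned", True),
}


def prompt_for(baseline: str, question: str, retrieved: List[str]) -> str:
    """Build the prompt a given baseline would see for one question."""
    _, uses_documents = BASELINES[baseline]
    return build_prompt(question, retrieved if uses_documents else None)
```

Under this framing, RAFT would sit alongside "DSF + RAG" in terms of prompt format; what changes is how the fine-tuning data was built.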

The results demonstrate that RAFT’s ability to leverage both domain-specific knowledge and document context leads to superior performance in domain-specific RAG tasks.

Qualitative Analysis: Understanding RAFT’s Advantage

To illustrate the qualitative difference between RAFT and DSF, consider one example. When asked to identify the screenwriter of a specific film, the DSF model incorrectly cites the film itself, which suggests it struggles to extract relevant information from the provided documents. In contrast, RAFT correctly identifies the screenwriter by making effective use of the context.

This example highlights the importance of training LLMs not only to align with the desired answering style but also to effectively process and utilize document context. RAFT achieves this by incorporating both aspects into its training recipe.

Conclusion: RAFT – A Powerful Tool for Domain-Specific RAG

RAFT presents a novel and effective approach for adapting LLMs to domain-specific RAG tasks. By training with a mix of oracle and distractor documents, utilizing CoT reasoning, and focusing on document context processing, RAFT equips LLMs with the necessary skills to excel in open-book question answering scenarios. As the use of LLMs in specialized domains continues to grow, RAFT offers a powerful tool to unlock their full potential.
