Latest Posts

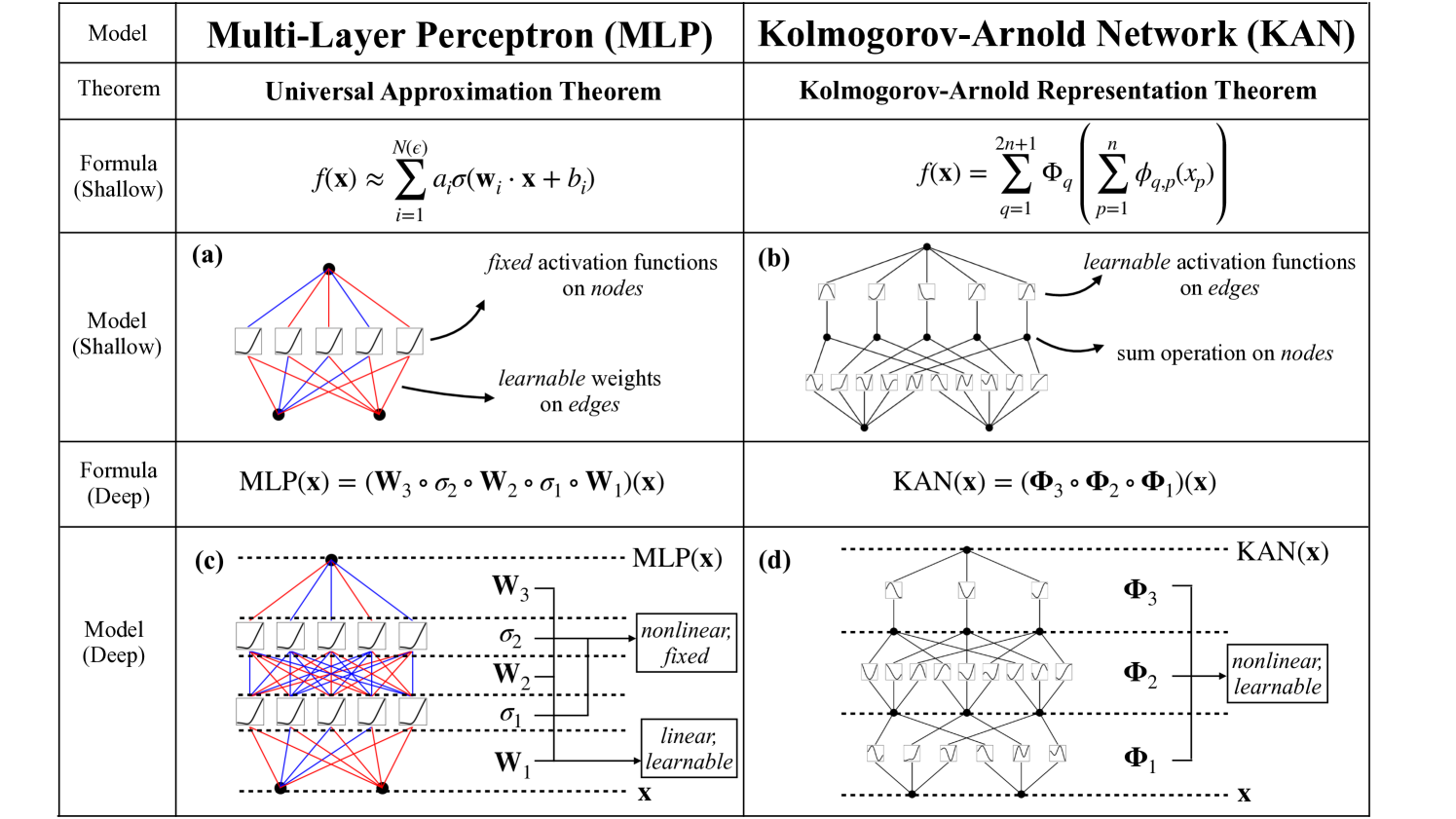

Kolmogorov-Arnold Networks: A Powerful Alternative to MLPs

MLPs come with limitations, particularly in terms of accuracy and interpretability. This is where Kolmogorov-Arnold Networks (KANs) step in, offering a compelling alternative inspired by a powerful mathematical theorem.

Decoupled Weight Decay Regularization: Bye Bye Adam Optimizer

Boost your neural network’s performance with AdamW! Learn how decoupled weight decay can significantly improve the Adam optimizer’s generalization, leading to better results and easier hyperparameter tuning.
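As a rough illustration of what "decoupled" means in this teaser, the toy sketch below applies one Adam update to a single scalar weight in two ways: with the decay folded into the gradient (classic L2 regularization) and with the decay applied directly to the weight (AdamW-style). The function name `adam_step` and the `decoupled` flag are hypothetical names chosen for this example; this is a simplified sketch, not the linked post's code or any library's implementation.

```python
import math

def adam_step(w, grad, m, v, t, lr=0.1, beta1=0.9, beta2=0.999,
              eps=1e-8, weight_decay=0.0, decoupled=True):
    """One Adam update for a scalar weight (illustrative only).

    Returns (new_w, new_m, new_v). `t` is the 1-based step count.
    """
    if not decoupled:
        # Coupled L2 regularization: the decay term joins the gradient,
        # so it is later rescaled by the adaptive denominator.
        grad = grad + weight_decay * w

    # Standard Adam moment estimates with bias correction.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)

    new_w = w - lr * m_hat / (math.sqrt(v_hat) + eps)

    if decoupled:
        # Decoupled decay: shrink the weight directly, outside the
        # adaptive gradient scaling.
        new_w = new_w - lr * weight_decay * w

    return new_w, m, v


# With a zero loss gradient, only the decay term moves the weight.
w_decoupled, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1,
                              weight_decay=0.1, decoupled=True)
w_coupled, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1,
                            weight_decay=0.1, decoupled=False)
print(w_decoupled, w_coupled)
```

In the coupled variant the decay is normalized like any other gradient, so its effective size depends on the adaptive scaling rather than on `weight_decay` alone; the decoupled variant shrinks the weight by `lr * weight_decay * w` regardless.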

ReALM: Reference Resolution with Language Modelling

This post explores ReALM, an approach by Apple researchers that uses language models to resolve references to both conversational and on-screen entities. ReALM outperforms existing systems and achieves accuracy comparable to OpenAI’s GPT-4, paving the way for more natural and intuitive interactions with voice assistants and conversational AI. Click to learn how ReALM is…

Demystifying LLMs: A Deep Dive into Large Language Models

This blog post will delve into the intricacies of LLMs, exploring their inner workings, capabilities, future directions, and potential security concerns.

Building an LLM in 2024: A Detailed Guide

This guide delves into the process of building an LLM from scratch, focusing on the often-overlooked aspects of training and data preparation. We’ll also touch on fine-tuning, inference, and the importance of sharing your work with the community.

LISA: A Simple But Powerful Way to Fine-Tune LLMs Efficiently

LISA introduces a surprisingly simple yet effective strategy for fine-tuning LLMs. It builds upon a key observation about LoRA: the weight norms across different layers exhibit an uncommon skewness. The bottom and top layers tend to dominate the updates, while the middle layers contribute minimally.

QMoE: Bringing Trillion-Parameter Models to Commodity Hardware

This blog post delves into QMoE, a novel compression and execution framework that tackles the memory bottleneck of massive MoEs. QMoE introduces a scalable algorithm that compresses trillion-parameter MoEs to less than 1 bit per parameter, utilizing a custom format and bespoke GPU decoding kernels for efficient end-to-end compressed inference.

AnimateDiff: Paper Explained

Introducing AnimateDiff, a framework that lets you animate your personalized text-to-image (T2I) models without the need for complex, model-specific tuning. This means you can now breathe life into your unique creations and watch them come alive in smooth, visually appealing animations.

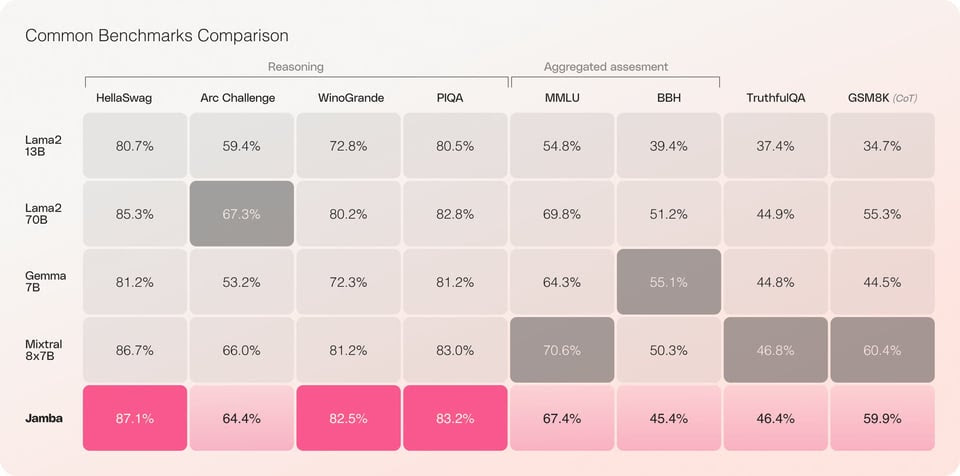

Jamba: A Hybrid Model (GPT + Mamba) by AI21 Labs

Jamba boasts a 256K-token context window, allowing it to consider a vast amount of preceding information when processing a task. This extended context window is particularly beneficial for tasks requiring a deep understanding of a long conversation or passage.