Latest Posts

Top AI/LLM learning resource in 2025

The blog is organized into three main segments: 📝 Notebooks. Below is a collection of notebooks and articles dedicated to LLMs. Tools: 🧐 LLM AutoEval: evaluate your LLMs automatically using RunPod; 🥱 LazyMergekit: merge models effortlessly using MergeKit with a single click; 🦎 LazyAxolotl: fine-tune models in the cloud…

Improve ChatGPT with Knowledge Graphs

ChatGPT has shown impressive capabilities in processing and generating human-like text. However, it is not without its imperfections. A primary concern is the model’s propensity to produce either inaccurate or obsolete answers, often called “hallucinations.” The New York Times recently highlighted this issue in its article, “Here’s What Happens When Your Lawyer Uses ChatGPT.”…

Uncensor any LLM with abliteration

The third generation of Llama models provided fine-tuned (Instruct) versions that excel at understanding and following instructions. However, these models are heavily censored, designed to refuse requests seen as harmful with responses such as “As an AI assistant, I cannot help you.” While this safety feature is crucial for preventing misuse, it limits the model’s…

Create Mixtures of Experts with MergeKit

Thanks to the release of Mixtral, the Mixture of Experts (MoE) architecture has become popular in recent months. This architecture offers an interesting tradeoff: higher performance at the cost of increased VRAM usage. While Mixtral and other MoE architectures are pre-trained from scratch, another method of creating MoE has recently appeared. Thanks to Arcee’s MergeKit…
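
The VRAM tradeoff mentioned above comes from how an MoE layer routes tokens: every expert must be held in memory, but only a few run for each token. Below is a minimal sketch of top-k routing in the style of Mixtral's top-2 gating, assuming the router logits are given directly (in a real model a learned linear layer computes them from the token's hidden state). The toy "scaler" experts and all function names are hypothetical, and this is not how MergeKit builds its MoE models.

```python
import math
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router_logits: Vector, experts: List[Expert], top_k: int = 2) -> Vector:
    """Route one token to its top_k experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Gate weights: softmax over the chosen experts' logits only.
    gates = softmax([router_logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out


def make_scaler(s: float) -> Expert:
    # Hypothetical toy expert: scales its input by s.
    return lambda v: [s * e for e in v]


# All four experts live in memory, but only two run for this token.
experts = [make_scaler(s) for s in (1.0, 2.0, 3.0, 4.0)]
print(moe_forward([1.0], router_logits=[0.0, 0.0, 5.0, 5.0], experts=experts))  # [3.5]
```

Compute per token scales with `top_k`, while memory scales with the total number of experts, which is the tradeoff the excerpt describes.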

Merge Large Language Models with MergeKit

Model merging is a technique that combines two or more LLMs into a single model. It’s a relatively new and experimental method to create new models for cheap (no GPU required). Model merging works surprisingly well and has produced many state-of-the-art models on the Open LLM Leaderboard. In this tutorial, we will implement it using the…
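
Merging needs no GPU because, at its simplest, it is plain arithmetic on the weights of models that share an architecture. The sketch below shows linear (weighted-average) merging, one of the simplest merge methods; it is not necessarily the method the tutorial implements. The dict-of-lists "model" format and the `linear_merge` name are hypothetical and are not MergeKit's API.

```python
from typing import Dict, List

# Hypothetical model format: parameter name -> flat list of weights.
Weights = Dict[str, List[float]]


def linear_merge(models: List[Weights], coeffs: List[float]) -> Weights:
    """Merge models with a normalized weighted average of each parameter."""
    if not models or len(models) != len(coeffs):
        raise ValueError("need one coefficient per model")
    total = sum(coeffs)
    merged: Weights = {}
    for name in models[0]:
        # Assumes every model has the same parameter names and sizes.
        size = len(models[0][name])
        merged[name] = [
            sum(c * m[name][i] for m, c in zip(models, coeffs)) / total
            for i in range(size)
        ]
    return merged


model_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
model_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(linear_merge([model_a, model_b], [0.5, 0.5]))
# {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```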

ExLlamaV2: The Fastest Library to Run LLMs

Quantizing Large Language Models (LLMs) is the most popular approach to reduce the size of these models and speed up inference. Among these techniques, GPTQ delivers amazing performance on GPUs. Compared to unquantized models, this method uses almost 3 times less VRAM while providing a similar level of accuracy and faster generation. It became so…

Decoding Strategies in Large Language Models

In the fascinating world of large language models (LLMs), much attention is given to model architectures, data processing, and optimization. However, decoding strategies like beam search, which play a crucial role in text generation, are often overlooked. In this article, we will explore how LLMs generate text by delving into the mechanics of greedy search…
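
The difference between greedy search and beam search can be seen on a tiny example. The sketch below assumes a hypothetical `toy_model` that returns next-token probabilities for a prefix (a real LLM would obtain these from a softmax over its logits). The probabilities are chosen so that greedy search commits to the locally best first token, while beam search keeps a second candidate alive and finds a sequence with higher overall probability.

```python
import math
from typing import Callable, Dict, List, Tuple

Seq = Tuple[str, ...]
# Hypothetical stand-in for an LLM: maps a prefix to next-token probabilities.
NextTokenFn = Callable[[Seq], Dict[str, float]]


def toy_model(prefix: Seq) -> Dict[str, float]:
    table = {
        (): {"A": 0.6, "B": 0.4},
        ("A",): {"x": 0.55, "y": 0.45},
        ("B",): {"x": 0.9, "y": 0.1},
    }
    return table.get(prefix, {"<eos>": 1.0})


def greedy_search(next_probs: NextTokenFn, steps: int) -> Seq:
    seq: Seq = ()
    for _ in range(steps):
        probs = next_probs(seq)
        # Commit to the single most likely next token at every step.
        seq += (max(probs, key=probs.__getitem__),)
    return seq


def beam_search(next_probs: NextTokenFn, steps: int, beam_width: int) -> Seq:
    # Each beam is (sequence, cumulative log-probability).
    beams: List[Tuple[Seq, float]] = [((), 0.0)]
    for _ in range(steps):
        candidates = [
            (seq + (tok,), score + math.log(p))
            for seq, score in beams
            for tok, p in next_probs(seq).items()
        ]
        # Keep the beam_width highest-scoring partial sequences.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams[0][0]


print(greedy_search(toy_model, steps=2))              # ('A', 'x'): 0.6 * 0.55 = 0.33
print(beam_search(toy_model, steps=2, beam_width=2))  # ('B', 'x'): 0.4 * 0.9 = 0.36
```

Summing log-probabilities rather than multiplying raw probabilities avoids numerical underflow on long sequences.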

Quantize Llama models with GGUF and llama.cpp

Due to the massive size of Large Language Models (LLMs), quantization has become an essential technique to run them efficiently. By reducing the precision of their weights, you can save memory and speed up inference while preserving most of the model’s performance. Recently, 8-bit and 4-bit quantization unlocked the possibility of running LLMs on consumer…
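
"Reducing the precision of the weights" can be illustrated with symmetric absmax round-to-nearest quantization: map each weight to a small integer using one scale factor, then multiply back by the scale at inference time. This is a simplified per-tensor sketch with hypothetical function names; llama.cpp's GGUF quantization types are block-wise (a separate scale per small block of weights) and more elaborate.

```python
from typing import List, Tuple


def absmax_quantize(weights: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric round-to-nearest quantization with one scale for the whole tensor."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax > 0 else 1.0
    # Clamp to the symmetric range [-qmax, qmax] after rounding.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.01]
q, scale = absmax_quantize(weights, bits=8)
restored = dequantize(q, scale)
print(q)  # [30, -127, 84, 3]
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```

Each 8-bit integer takes a quarter of the space of a 32-bit float (half that of FP16), at the cost of a rounding error bounded by half the scale.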

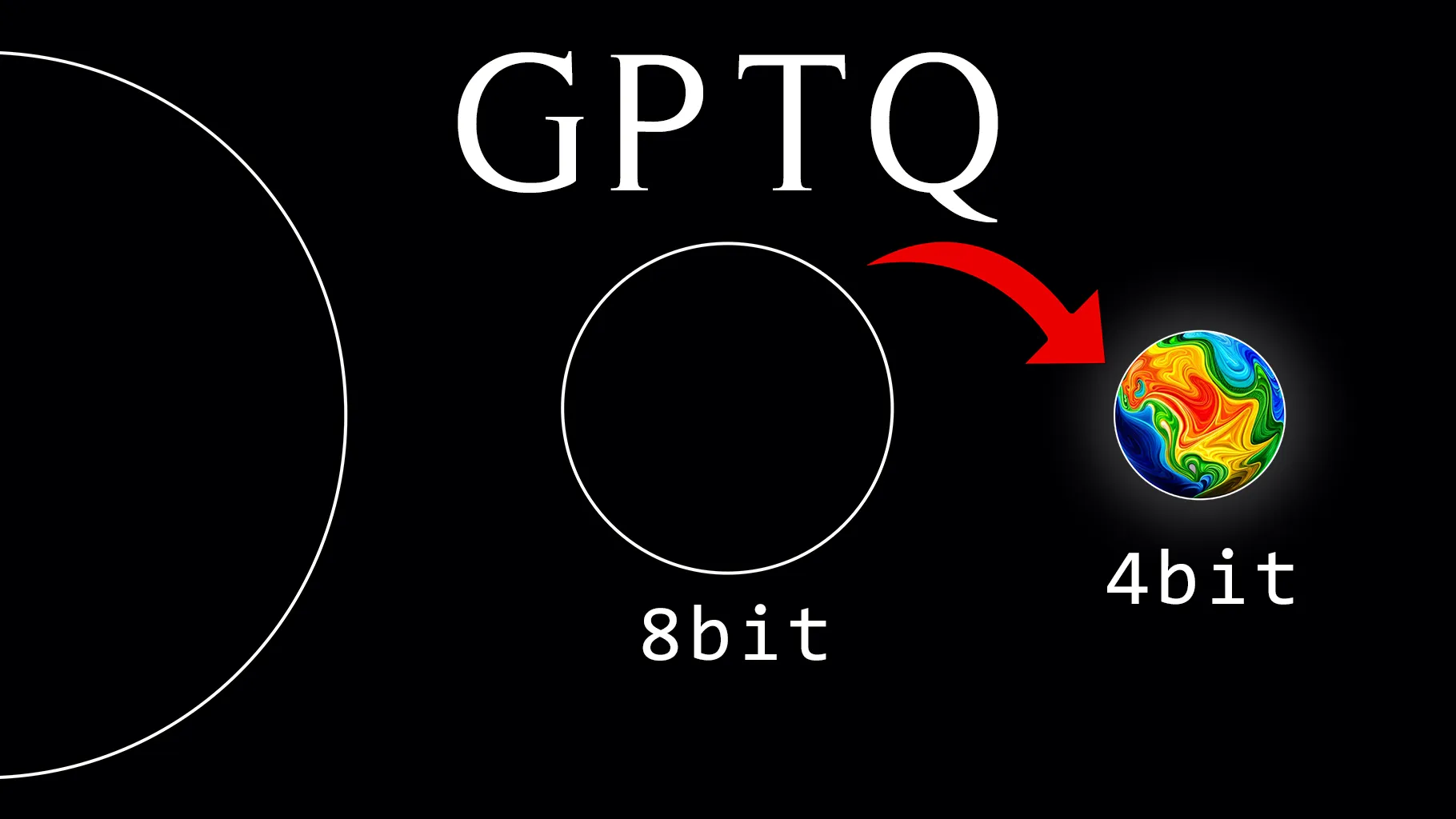

4-bit LLM Quantization with GPTQ

Recent advancements in weight quantization allow us to run massive large language models on consumer hardware, like a LLaMA-30B model on an RTX 3090 GPU. This is possible thanks to novel 4-bit quantization techniques with minimal performance degradation, like GPTQ, GGML, and NF4. 🧠 Optimal Brain Quantization: For every layer \( \ell \) in the…
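
Back-of-the-envelope arithmetic shows why 4-bit quantization puts a 30B model within reach of a 24 GB RTX 3090. The sketch uses the nominal 30B parameter count and counts the weights only; it ignores quantization scales, activations, and the KV cache, so real memory use is somewhat higher.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 30e9   # nominal "30B" size
RTX_3090_GB = 24  # VRAM of an RTX 3090

for label, bits in [("FP16", 16), ("4-bit", 4)]:
    gb = weight_memory_gb(N_PARAMS, bits)
    print(f"{label}: {gb:.1f} GB, fits on a 3090: {gb < RTX_3090_GB}")
# FP16: 60.0 GB, fits on a 3090: False
# 4-bit: 15.0 GB, fits on a 3090: True
```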