Latest Posts

From Structured Data to the Unstructured Data Revolution: Navigating the LLM Landscape

The AI field has evolved dramatically. For over a decade, structured data drove most of the value delivered by AI. Supervised learning and deep learning excelled at labeling tasks, but this approach overlooks a crucial reality: most organizational data is unstructured. This blog post explores the shift towards leveraging unstructured data with Large Language Models (LLMs), addresses the hype around Artificial General…

Navigating the Real-World Risks of LLM Deployment in the Enterprise

The promise of AI co-pilots augmenting our work is captivating. However, the reality of enterprise AI adoption often presents a different picture. This post explores the practical challenges and solutions for deploying secure and accurate large language model (LLM) applications in real-world business settings. The LLM Landscape and Its Challenges: Open-access large language models are…

Measuring the ROI of AI Coding Tools: Beyond the Hype

The buzz around AI coding tools is undeniable. Promising increased productivity and reduced development costs, these tools are quickly becoming a staple in the software development landscape. But amidst the excitement, a crucial question lingers: how do we actually measure their real impact on our bottom line? This is a challenge many engineering leaders grapple…

The Democratization of AI: From Demo to Production

The world of Artificial Intelligence is rapidly evolving. AI is no longer confined to academic research or niche tech companies. It’s permeating every industry, from healthcare and agriculture to manufacturing and beyond. This democratization is largely thanks to large language models (LLMs) and conversational interfaces, which make AI accessible to a broader audience. But a significant…

Decoding LLM Inference: A Deep Dive into Workloads, Optimization, and Cost Control

Large language models (LLMs) have revolutionized how we interact with technology. But deploying these powerful models for inference can be complex and costly. This blog post delves into the intricacies of LLM inference, providing a clear understanding of the workload, key performance metrics, and practical optimization strategies. Understanding the LLM Inference Workload: At its core,…

The Power of Amazon S3: Transforming Storage into a Data Lakehouse and Avoiding Costly Pitfalls

Amazon S3 is evolving beyond traditional storage into a robust data lakehouse solution with the introduction of Amazon S3 Tables and S3 Metadata. In this comprehensive guide, we explore how these innovations leverage the Apache Iceberg open table format to enhance data management, optimize query performance, and integrate seamlessly with a variety of data…

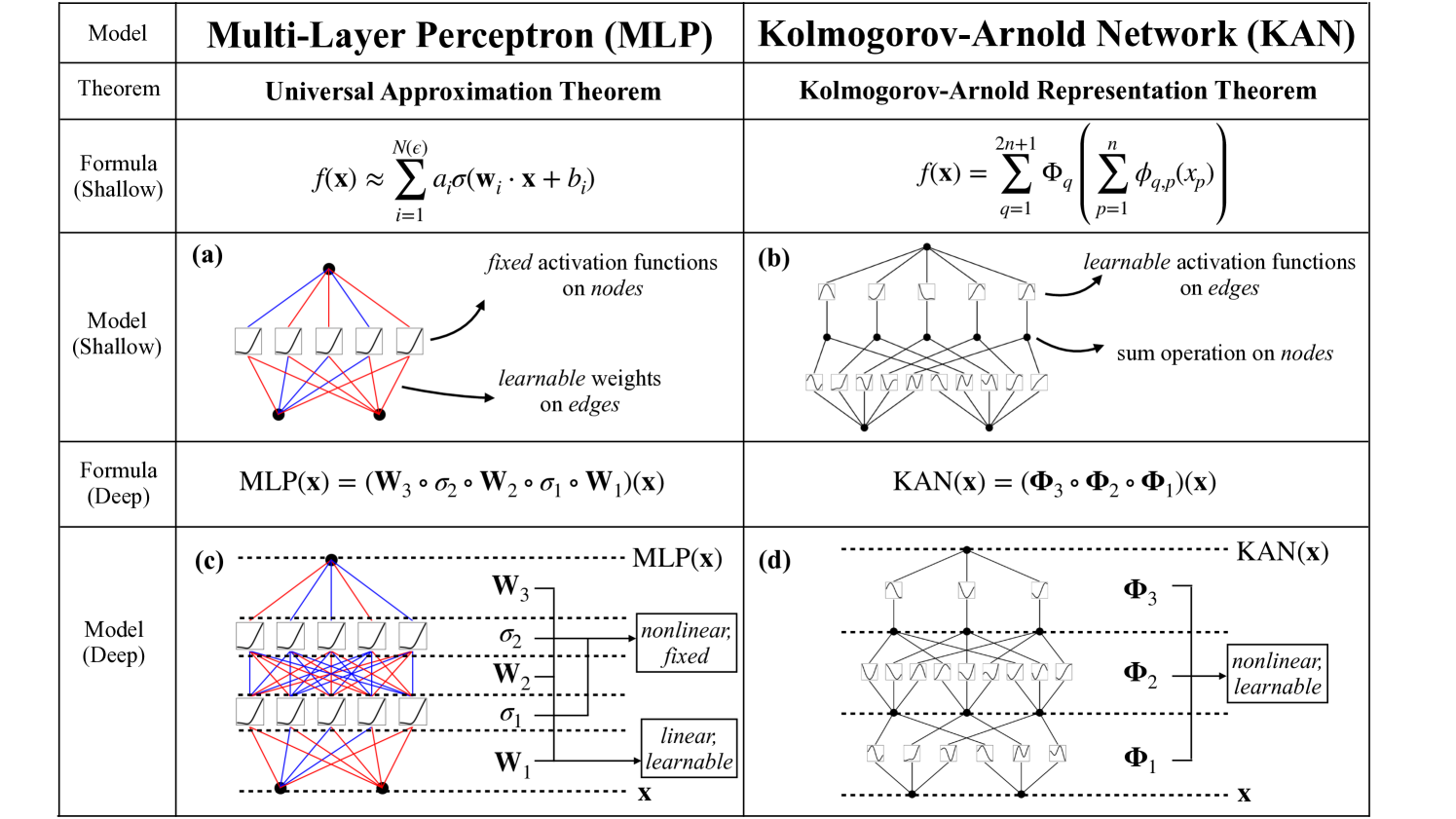

Kolmogorov-Arnold Networks: A Powerful Alternative to MLPs

Multilayer perceptrons (MLPs) come with limitations, particularly in terms of accuracy and interpretability. This is where Kolmogorov-Arnold Networks (KANs) step in, offering a compelling alternative inspired by the Kolmogorov-Arnold representation theorem.

Decoupled Weight Decay Regularization: Bye Bye Adam Optimizer

Boost your neural network’s performance with AdamW! Learn how decoupled weight decay can significantly improve the Adam optimizer’s generalization ability, leading to better results and easier hyperparameter tuning.

ReALM: Reference Resolution with Language Modelling

This blog explores ReALM, a groundbreaking approach by Apple researchers that uses language models to resolve references to both conversational and on-screen entities. ReALM outperforms existing systems and achieves accuracy comparable to OpenAI’s GPT-4, paving the way for more natural and intuitive interactions with voice assistants and conversational AI. Click to learn how ReALM is…