Latest Posts


What Is AUC?

Introduction: What Is the AUC ROC Curve In Machine Learning? AUC, short for area under the ROC (receiver operating characteristic) curve, is a relatively straightforward metric that is useful across a range of use cases. In this post, we present an intuitive way of understanding how AUC is calculated. How Do You Calculate…
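
One intuitive way to compute ROC AUC, offered here as a sketch rather than the exact method the post walks through, uses its ranking interpretation: AUC equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative example, with ties counted as half. The helper name `roc_auc` and the toy data are hypothetical, and the sketch assumes labels are 0 or 1.

```python
from itertools import product


def roc_auc(labels, scores):
    """Hypothetical helper: ROC AUC as the fraction of (positive, negative)
    pairs in which the positive example is scored higher (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0  # positive correctly ranked above negative
        elif p == n:
            wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 0 = negative class, 1 = positive class
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 0.75
```

The pairwise loop costs O(P * N) comparisons, which keeps the logic easy to follow; for large datasets a library routine such as scikit-learn's `roc_auc_score` is the more practical choice.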


What Is PR AUC?

PR AUC, short for area under the precision-recall (PR) curve, is a common way to summarize a model’s overall performance. For a perfect classifier, PR AUC = 1 because your model always correctly predicts the positive and negative classes. Since precision-recall curves do not consider true negatives, PR AUC is commonly used for heavily…
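
A common way to estimate PR AUC is average precision: walk the scores from highest to lowest and, at each distinct threshold, add the gain in recall multiplied by the precision at that threshold. The sketch below assumes 0/1 labels; `average_precision` is a hypothetical helper, and the post may use a different estimator (for example, trapezoidal interpolation of the curve).

```python
def average_precision(labels, scores):
    """Hypothetical helper: step-wise PR AUC, i.e. the sum over decreasing
    thresholds of (recall_n - recall_{n-1}) * precision_n."""
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive example")
    pairs = sorted(zip(scores, labels), reverse=True)  # highest score first
    tp = fp = 0
    prev_recall = 0.0
    ap = 0.0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Count every example tied at this score as one threshold step.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / total_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Hypothetical toy data
print(round(average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]), 4))  # 0.8333
# A perfect ranking (every positive above every negative) gives 1.0
print(average_precision([0, 1, 1], [0.1, 0.9, 0.8]))  # 1.0
```

Note that true negatives never appear in the calculation, which is why the metric focuses on how well the positive class is retrieved.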


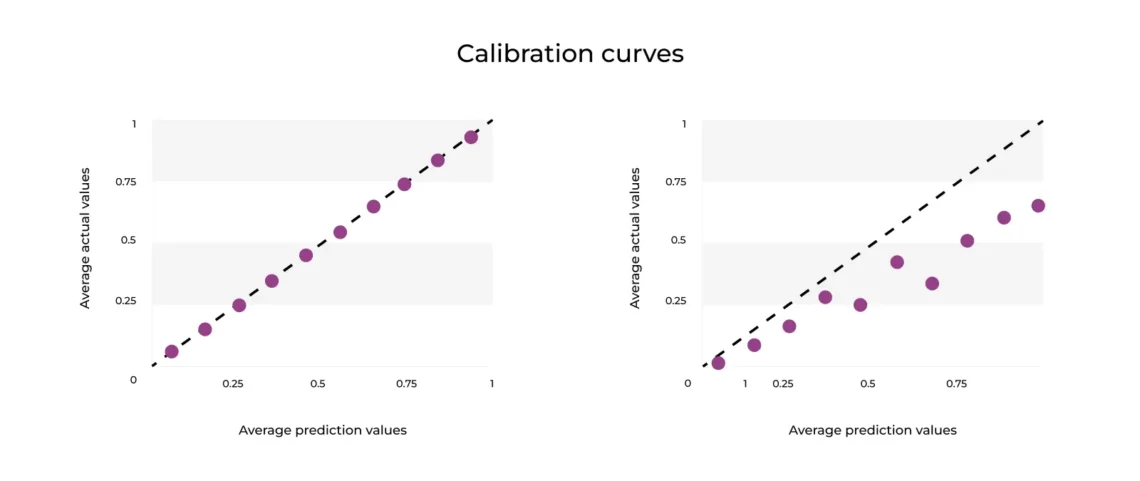

Calibration Curves: What You Need To Know

In machine learning, calibration is used to better estimate the confidence intervals and prediction probabilities of a given model. Calibration is particularly useful for models like decision trees or random forests, where certain classifiers only output the label of the event and don’t natively support probabilities or confidence intervals. When modelers want to be confident in…
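
A calibration curve (reliability diagram) groups predictions by their predicted probability and compares the average prediction in each group with the fraction of examples that were actually positive; for a well-calibrated model the two are close. The sketch below assumes 0/1 labels, probabilities in [0, 1], and equal-width bins. The helper `calibration_curve` and its data are hypothetical, although scikit-learn ships a similar function in `sklearn.calibration`.

```python
def calibration_curve(labels, probs, n_bins=5):
    """Hypothetical helper: return (fraction_positive, mean_predicted)
    for each non-empty equal-width probability bin."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(labels, probs):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((y, p))
    frac_pos, mean_pred = [], []
    for b in bins:
        if b:  # skip empty bins rather than dividing by zero
            frac_pos.append(sum(y for y, _ in b) / len(b))
            mean_pred.append(sum(p for _, p in b) / len(b))
    return frac_pos, mean_pred


# Hypothetical predictions from some classifier
labels = [0, 1, 0, 1, 1, 1]
probs = [0.2, 0.3, 0.6, 0.7, 0.8, 0.9]
frac_pos, mean_pred = calibration_curve(labels, probs, n_bins=2)
print([round(x, 3) for x in frac_pos], [round(x, 3) for x in mean_pred])
# [0.5, 0.75] [0.25, 0.75]
```

Here the low-probability bin predicts 0.25 on average while half of its examples are positive, so the model looks underconfident in that range; the upper bin matches closely.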


Understanding and Applying F1 Score: A Deep Dive

F1 score is the harmonic mean of precision and recall. Commonly used as an evaluation metric in binary and multi-class classification, the F1 score combines precision and recall into a single metric to give a better understanding of model performance. The F-score can be modified into F0.5, F1, and F2 based on the…
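
The general F-beta score is F_beta = (1 + beta^2) * P * R / (beta^2 * P + R), where P is precision and R is recall; beta = 1 gives the F1 score (the plain harmonic mean), beta > 1 weights recall more heavily, and beta < 1 weights precision more heavily. A minimal sketch, with `f_beta` as a hypothetical helper name:

```python
def f_beta(precision, recall, beta=1.0):
    """Hypothetical helper: weighted harmonic mean of precision and recall."""
    if precision == 0 and recall == 0:
        return 0.0  # avoid dividing by zero when both inputs are zero
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


p, r = 0.5, 1.0  # hypothetical precision and recall
print(round(f_beta(p, r), 4))            # F1   -> 0.6667
print(round(f_beta(p, r, beta=2), 4))    # F2   -> 0.8333 (favors recall)
print(round(f_beta(p, r, beta=0.5), 4))  # F0.5 -> 0.5556 (favors precision)
```

Because recall is higher than precision in this example, F2 comes out above F1 and F0.5 below it.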


Recall: What Is It and How Does It Differ From Precision?

In machine learning, recall is a performance metric that corresponds to the fraction of values correctly predicted to be of a positive class out of all the values that truly belong to the positive class (including false negatives). It differs from precision, which is the fraction of values that actually belong to a positive class…
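
In terms of confusion-matrix counts, recall = TP / (TP + FN): the denominator is every example that truly belongs to the positive class. A minimal sketch, assuming label lists where `positive` marks the positive class; the helper name and data are hypothetical.

```python
def recall(y_true, y_pred, positive=1):
    """Hypothetical helper: TP / (TP + FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed positives
    if tp + fn == 0:
        raise ValueError("recall is undefined: no positive examples in y_true")
    return tp / (tp + fn)


y_true = [1, 1, 1, 0, 0]  # three actual positives
y_pred = [1, 0, 1, 1, 0]  # two of them are caught, one is missed
print(round(recall(y_true, y_pred), 4))  # 0.6667
```

The false positive in the fourth position does not affect recall at all; it only lowers precision.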


Precision: Understanding This Foundational Performance Metric

What Is Precision? In machine learning, precision is a model performance metric that corresponds to the fraction of values that actually belong to a positive class out of all the values that are predicted to belong to that class. Precision is also known as the positive predictive value (PPV). Equation: Precision = true positives…
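
In terms of confusion-matrix counts, precision = TP / (TP + FP): the denominator is every example the model predicted as positive. A minimal sketch, with a hypothetical helper name and data; this version raises an error when there are no positive predictions, although libraries often handle that case with a configurable default instead.

```python
def precision(y_true, y_pred, positive=1):
    """Hypothetical helper: TP / (TP + FP), also called positive predictive value."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    fp = sum(1 for t, p in pairs if p == positive and t != positive)  # false alarms
    if tp + fp == 0:
        raise ValueError("precision is undefined: no positive predictions")
    return tp / (tp + fp)


y_true = [1, 1, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1]  # four positive predictions, two of them correct
print(precision(y_true, y_pred))  # 0.5
```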


The Value of Performance Tracing In Machine Learning

Key Components of Observability: In infrastructure and systems, logs, metrics, and tracing are all key to achieving observability. These components are also critical to achieving ML observability, which is the practice of obtaining a deep understanding of your model’s data and performance across its lifecycle. Inference Store – Records of ML prediction events that…


ML Observability: The Essentials

As more and more teams turn to machine learning to streamline their businesses or make previously impractical technologies a reality, there has been a rising interest in ML infrastructure tools to help teams get from research to production and troubleshoot model performance. Google built TFX, Facebook built FBLearner, Uber built Michelangelo, Airbnb built Bighead, and…


What Is Observability?

In 1969, humans first stepped on the Moon thanks to a lot of clever engineering and 150,000 lines of code. Among other things, this code enabled engineers at mission control to have a full view of the mission and make near-real-time decisions. The amount of code was so small that engineers were able to thoroughly…