Latest Posts

Dual Chunk Attention: Unleash the Power of Long Text

Long-Context Powerhouse: Training-Free Approach Extends LLM Capabilities [Paper]

Large language models (LLMs) are transforming many fields, but their effectiveness often depends on how well they understand long stretches of text. This is crucial for tasks such as analyzing lengthy documents, remembering extended dialogue history, and powering chatbots. While recent advancements have shown success in improving LLMs’…

Making LLMs Efficient: 1-Bit LLMs with BitNet

Artificial intelligence is evolving continually, with Large Language Models (LLMs) at the forefront, showing remarkable capabilities across a wide range of natural language processing tasks. Yet as these models grow in size, deploying them becomes increasingly difficult. Concerns about the environmental and economic impact of their high energy consumption have pushed the field…

EMO: A New Frontier in Talking Head Technology

EMO: Emote Portrait Alive – Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions [Ref] [Ref]

Image generation has changed dramatically with the advent of diffusion models, a breakthrough that is redefining the boundaries of digital creativity. These models, known for producing high-fidelity images, are…

Future of Alignment: Discover the Power of DPO

Constructing ChatGPT

The currently established approach is as follows (figure source: Chip Huyen). First, you gather trillions of words from billions of documents and, through a self-supervised process, train the model to predict the next token (word or sub-word) in a given sequence. Next, you aim to instruct the model to act in a specific…
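The self-supervised step described above needs no human labels, because the text supplies its own targets: every prefix of a tokenized sequence is paired with the token that follows it. The sketch below illustrates only that pairing idea under simple assumptions (a plain list of integer token IDs, chosen here as hypothetical values); it is not the pipeline from the source.

```python
def next_token_pairs(token_ids: list[int]) -> list[tuple[list[int], int]]:
    """Return (context, target) pairs for self-supervised next-token prediction.

    Each prefix token_ids[:i] is paired with the token that follows it,
    token_ids[i]. The text itself provides the labels.
    """
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]


# Hypothetical token IDs standing in for one tokenized sentence.
example = [101, 7592, 2088, 102]
for context, target in next_token_pairs(example):
    print(context, "->", target)
```

In practice, training frameworks compute all of these predictions in one forward pass by shifting the sequence by one position, but the supervision signal is the same.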

Revolutionizing ML: Apple’s MLX Outperforms in Benchmark Tests

Less than two months after its initial release, MLX, the latest framework from Apple’s ML research group, has been rapidly adopted by the ML community. It has already collected more than 12,000 stars on GitHub and prompted the formation of…

Unlock MacBook: Run 70B AI Models Effortlessly with AirLLM 2.8

If you think of your Apple MacBook solely as a device for making PowerPoint presentations, browsing the web, and watching series, you are underestimating its capabilities. The MacBook is more than an attractive design; its AI performance is equally impressive. Inside it is a highly capable GPU, designed specifically to excel in the execution…

Unveiling Smaug: The Open-Source LLM King

Abacus.ai has introduced Smaug, an enhanced version of Alibaba’s Qwen_72B, which now stands as the leading open-source model: it is the first open-source model ever to achieve an average score of 80 across a range of benchmarks. It is also strong evidence that we have finally found a method that narrows the divide…

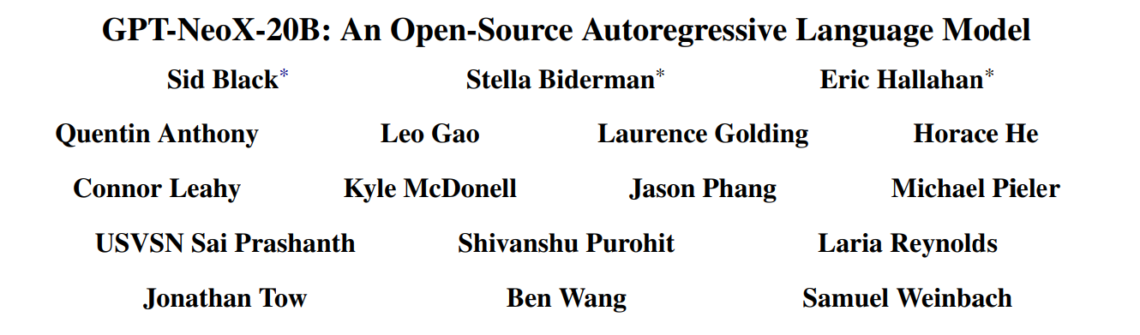

Paper – GPT-NeoX

GPT-NeoX-20B is an autoregressive language model trained on the Pile, and the largest dense autoregressive model that had publicly available weights at the time of submission.

Model Architecture

GPT-NeoX-20B’s architecture largely follows that of GPT-3, with a few notable deviations. It has 44 layers, a hidden dimension size of 6144, and 64 heads. Rotary Positional…
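The quoted dimensions already pin down most of the model’s size. The sketch below is back-of-the-envelope arithmetic, not a figure taken from the paper: it assumes the common 12·L·d² approximation (attention projections plus a feed-forward layer with a 4× inner width) and ignores embeddings, biases, and layer norms.

```python
def transformer_block_params(n_layers: int, d_model: int) -> int:
    """Approximate parameter count of the transformer blocks only.

    Rule of thumb (an assumption, not the paper's exact accounting):
    4 * d^2 for the Q, K, V and output projections, plus
    8 * d^2 for a feed-forward layer with a 4 * d_model inner size.
    Embeddings, biases and layer norms are ignored.
    """
    return 12 * n_layers * d_model ** 2


N_LAYERS, D_MODEL, N_HEADS = 44, 6144, 64  # values quoted for GPT-NeoX-20B

head_dim = D_MODEL // N_HEADS
block_params = transformer_block_params(N_LAYERS, D_MODEL)
print("per-head dimension:", head_dim)             # 96
print(f"block parameters: {block_params / 1e9:.1f}B")  # 19.9B
```

The blocks alone come to roughly 19.9B parameters, which is consistent with the “20B” in the model’s name; the embedding layers, which this approximation ignores, make up most of the rest.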

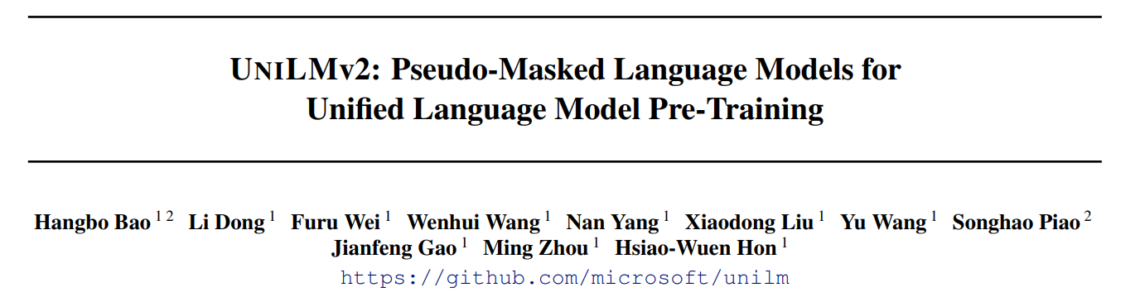

Paper – UniLMv2

UniLMv2 introduces PMLM (pseudo-masked language model), a novel training procedure that efficiently learns inter-relations between corrupted tokens and their context via autoencoding, as well as intra-relations between masked spans via partially autoregressive modeling, significantly improving the performance of language models on diverse NLP tasks.

Overview of PMLM pre-training. The model parameters are shared across the LM objectives.…
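To make the two objectives concrete, here is a simplified sketch of how a pseudo-masked input sequence can be assembled, based on our reading of the UniLMv2 paper rather than its released code. Masked tokens are replaced by [M] for the autoencoding part. For the partially autoregressive part, each span, taken in a chosen factorization order, is appended as [P] placeholders followed by its original tokens, and both reuse the span’s original positions. The function name, the half-open span format, and the example factorization order are hypothetical, and the attention masks that control which tokens each position may see are omitted.

```python
MASK, PSEUDO = "[M]", "[P]"


def build_pmlm_input(tokens, spans):
    """Simplified sketch of UniLMv2-style pseudo-masked input construction.

    tokens: list of input tokens.
    spans:  list of (start, end) half-open index ranges to mask, listed in a
            hypothetical factorization order for the partially
            autoregressive objective.
    Returns (input_tokens, position_ids). Attention masks are not built here.
    """
    masked = {i for start, end in spans for i in range(start, end)}
    # Autoencoding part: corrupted tokens become [M]; context stays visible.
    inp = [MASK if i in masked else t for i, t in enumerate(tokens)]
    pos = list(range(len(tokens)))
    # Partially autoregressive part: per span, append [P] placeholders and
    # then the original tokens, both reusing the span's original positions.
    for start, end in spans:
        span_pos = list(range(start, end))
        inp += [PSEUDO] * (end - start) + tokens[start:end]
        pos += span_pos + span_pos
    return inp, pos


tokens = ["x1", "x2", "x3", "x4", "x5", "x6"]
inp, pos = build_pmlm_input(tokens, spans=[(3, 5), (1, 2)])
print(inp)
print(pos)
```

Because the [P] tokens and the appended originals share position IDs with the masked slots, a single forward pass can serve both objectives while the model parameters stay shared, which matches the figure caption above.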